In my previous post, I talked about the FQDN filtering feature, which is one of the new add-ons of the NSX Advanced Firewall.

In Part 3 of this multi-part blog series, let’s focus on the latest feature, Distributed IDS/IPS, which is part of the newly announced NSX Advanced Firewall for VMware Cloud on AWS.

Introduction to Distributed IDS/IPS

With NSX Distributed IDS/IPS, customers gain protection against attempts to exploit vulnerabilities in workloads running on VMware Cloud on AWS.

Distributed IDS/IPS is an application-aware deep packet inspection engine that can examine and protect traffic inside the SDDC. Customers can detect and prevent lateral threat movement within the SDDC using the intrinsic security capabilities of Distributed IDS/IPS.

Like DFW, Distributed IDS/IPS is built into the hypervisor and inspection can be performed for all traffic coming into or leaving the VM. Since the inspection is performed on all the hypervisor hosts in a distributed manner, there is no single inspection bottleneck that chokes the traffic flow.

Enabling Distributed IDS/IPS

The first thing we will do is activate and configure the Distributed IDS/IPS feature in the VMC on AWS SDDC.

If you haven’t already activated the NSX Advanced Firewall add-on, please do so; otherwise you will get this message:

Remember that in the first post of this series, I showed you how to activate the NSX Advanced Firewall add-on for VMware Cloud on AWS.

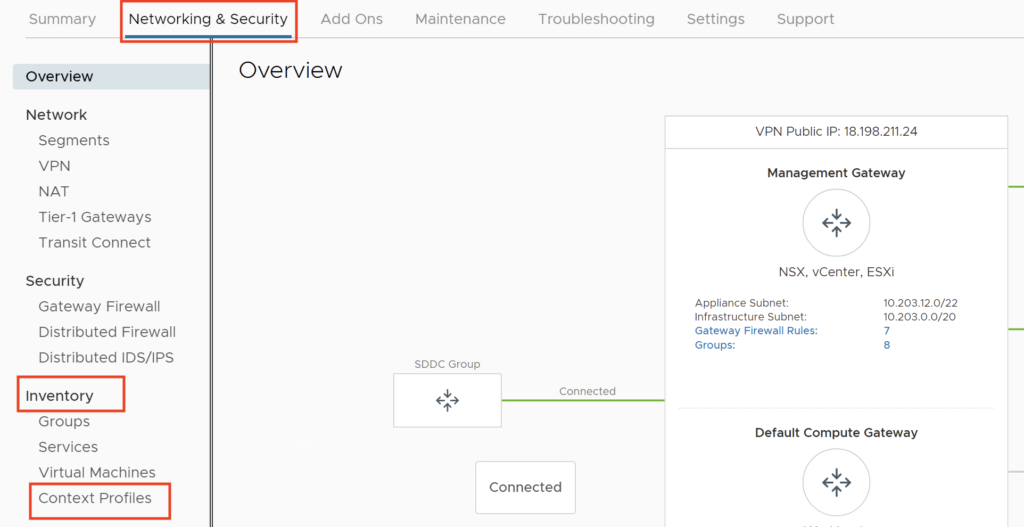

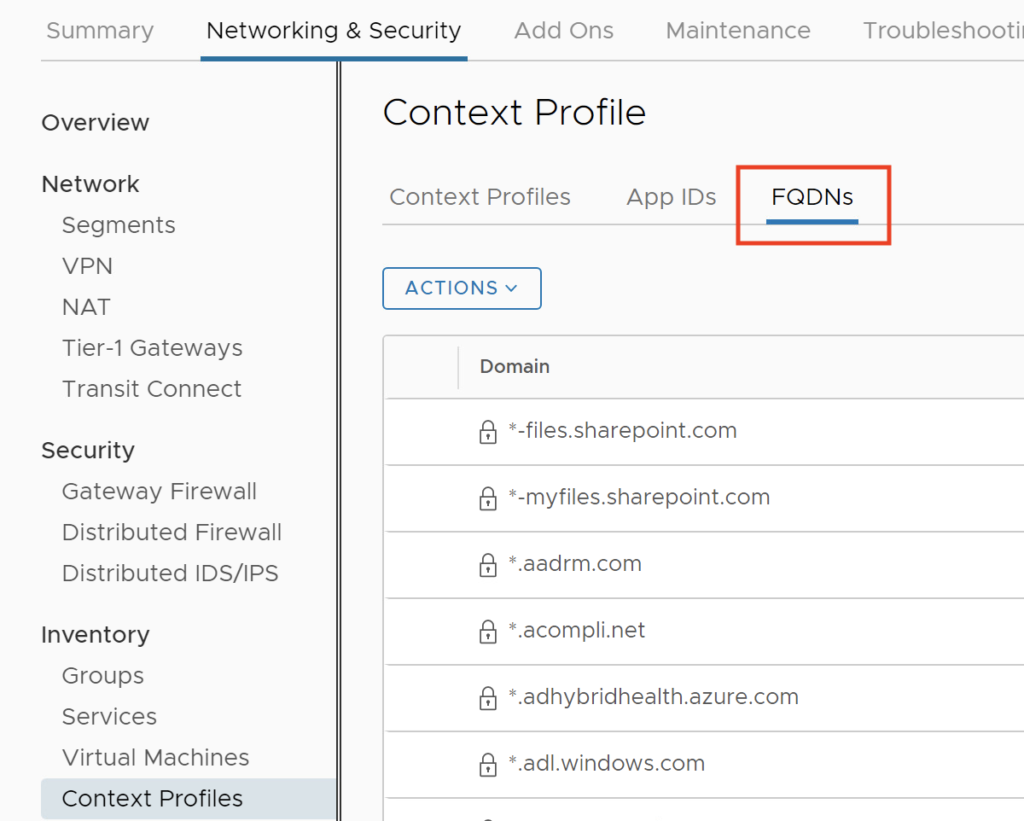

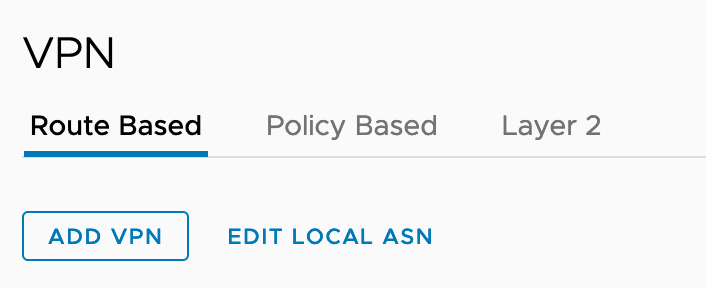

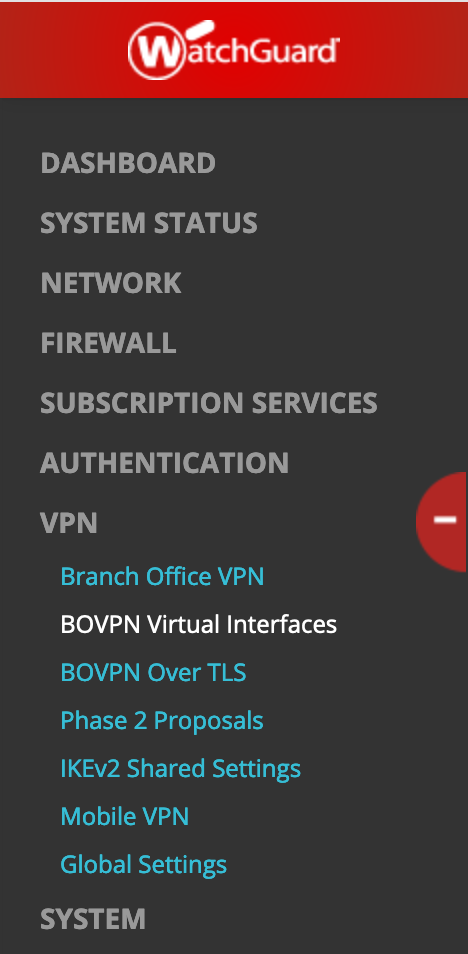

Once you have activated the add-on, click the Networking and Security tab in the browser, then click Distributed IDS/IPS, located in the Security section.

The IDS/IPS is disabled by default so you have to enable it for the cluster. Here I have only one cluster.

Just move the slider to enable the feature, confirm that you want to enable it for the cluster, and you are ready to test it!

Once it’s enabled, you can choose to update the signatures regularly by selecting the Auto Update new versions button.

NSX Distributed IDS/IPS utilizes the latest threat signature sets and anomaly detection algorithms to identify attempts at exploiting vulnerabilities in applications. It is integrated with the NSX Threat Intelligence Cloud Service to always remain up to date on the latest threats identified on the Internet.

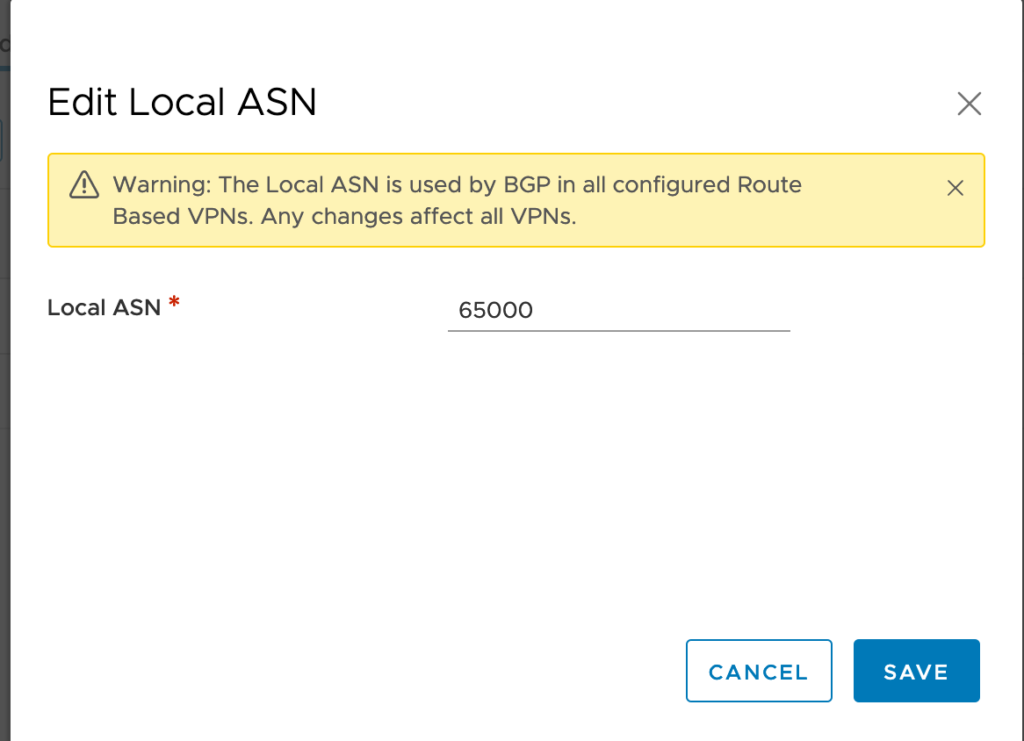

You can check the other versions that have been present in the environment by clicking the View and change versions link.

This opens a new window with historical details. Here we can see that the first default signature set was installed on Jun 17th, 2021 and that additional signatures were pushed on Oct 20th and Nov 12th.

We are going to go ahead and use the latest version.

If your NSX Manager doesn’t have access to the Internet, you can also download the IDS/IPS signatures from the Network Threat Intelligence services page and upload them manually.

Now it’s time to finish configuring the feature and launch some test attacks, leveraging both Kali Linux and the Infection Monkey tooling to simulate them!

Configuring Distributed IDS/IPS profile & rule

Create a Profile for IDS/IPS

In this section, I will create a default profile to use with an IDS/IPS rule.

NB: We can configure up to 25 profiles.

Under the Profiles tab of Distributed IDS/IPS, within the Security section, I clicked ADD PROFILE and created the ChrisIDSProfile profile:

I have accepted the default settings, but you can customise the profile to meet your requirements. You can, for instance, select only intrusions with a severity level of Critical, or of High and Critical.

You can also tweak it by selecting specific CVSS scores or attack types.
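To make the effect of these profile settings more concrete, here is a minimal Python sketch of the selection logic a profile expresses: keep only the signatures that match the chosen severities and a minimum CVSS score. It is not the NSX API; the `Signature` class, its fields, the `select_signatures` helper, and the sample entries are all hypothetical and only illustrate the idea.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Signature:
    """Hypothetical stand-in for an IDS signature entry (not an NSX object)."""
    signature_id: int
    severity: str   # "Critical", "High", "Medium" or "Low"
    cvss: float     # CVSS base score, 0.0 to 10.0


def select_signatures(signatures, severities, min_cvss=0.0):
    """Keep signatures whose severity is in `severities` and whose
    CVSS score is at least `min_cvss`."""
    wanted = {s.lower() for s in severities}
    return [
        sig for sig in signatures
        if sig.severity.lower() in wanted and sig.cvss >= min_cvss
    ]


# Made-up sample data, for illustration only.
SAMPLE = [
    Signature(1001, "Critical", 9.8),
    Signature(1002, "High", 7.5),
    Signature(1003, "Medium", 5.3),
    Signature(1004, "Low", 2.1),
]

# Equivalent of a profile restricted to High and Critical severities.
critical_and_high = select_signatures(SAMPLE, {"Critical", "High"})
print([sig.signature_id for sig in critical_and_high])
```

A narrower profile means fewer signatures evaluated and fewer alerts to triage, at the cost of missing lower-severity events.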

I clicked Save to finish creating the profile.

We can see that the profile has been successfully created.

After a few seconds it appears in green:

Create a Policy with rules for IDS/IPS

Now let’s create a Policy.

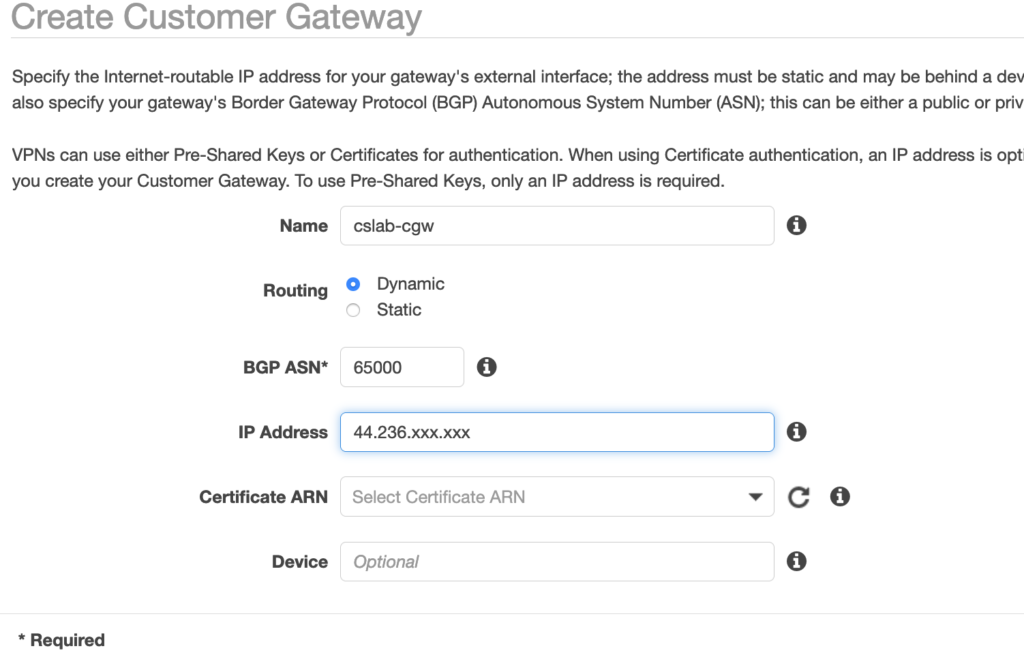

For that, I need to go to the Rules tab and add a specific IDS/IPS Policy called ChrisIDS-Policy.

I selected the check box next to the name of the policy, then clicked Add Rule.

To finish the configuration I have to select the profile previously created.

I have also changed the source from Any to my SDDC subnets.

Please note that I left the Destinations and Services columns set to Any and the Applied To field set to DFW.

I have also left the Mode set to Detect Only. In production, it’s better to change this setting and switch to Detect & Prevent.

Now that I am done with the setup, I just need to click Publish.

Now it’s time to test some attacks and exploits.

Testing the IDS/IPS

In order to test the IDS/IPS feature, I have used my best security scanning tools to generate some attacks and try to exploit some vulnerabilities on one special server.

Basically, I will launch the exploits against an OWASP Web Application server, a deliberately vulnerable test server that I have deployed in my SDDC. In a nutshell, OWASP stands for The Open Web Application Security Project®, a nonprofit foundation that works to improve the security of software. Attacking such a server is a very good way to test the level of security of your environment.

This OWASP server is going to be the target for all the vulnerability scanning coming from my two different tools.

Scanning tools

The first one is the Kali Linux distribution, running in a virtual machine, which has a multitude of security tools preinstalled. I love it!

The second one is the Infection Monkey virtual appliance from Guardicore which is a platform with a graphical interface that you can leverage to launch the exploits.

Infection Monkey is an open source breach and attack simulation (BAS) platform that allows organisations to discover security gaps and fix them. You can simply infect a random machine with the Infection Monkey and automatically discover your security risks. It tests for different scenarios: credential theft, compromised machines and other security flaws.

Deploying Kali Linux

It’s a simple process, as you can install it from an ISO image or download a virtual image directly from here.

I chose to install it from the ISO, as it gives more flexibility to tweak the VM settings.

Once the VM is deployed there is nothing more to do.

Deploying Monkey Island VM

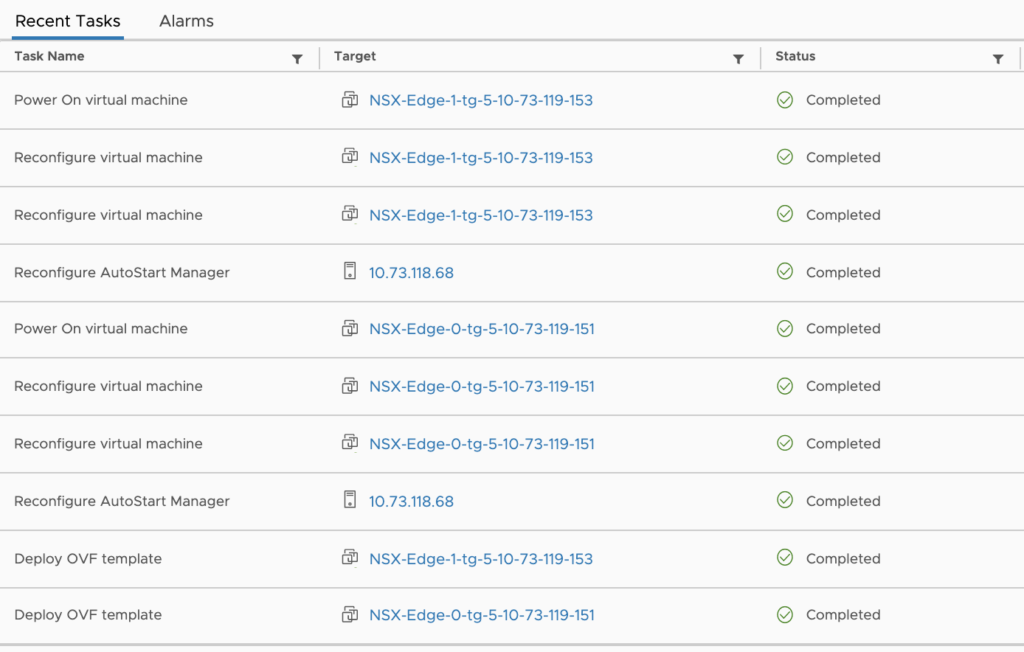

First I have deployed the Monkey Island VM from the OVA downloaded from the Infection Monkey website. This is an Ubuntu Linux VM with a small footprint of only 2 vCPU and 2GB of RAM.

Once it was deployed, I just started the VM.

My VM was up and running very quickly, and I could connect to its web console on port 5000.
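If the web console does not load, it can help to first confirm that the port is reachable from your workstation. The following is a minimal sketch using only the Python standard library; the `is_port_open` helper is my own illustration and the host value in the usage note is a placeholder, since the Monkey Island address is not given in this post.

```python
import socket


def is_port_open(host, port, timeout=3.0):
    """Return True if a TCP connection to host:port succeeds within
    `timeout` seconds, False otherwise."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts and unreachable hosts.
        return False
```

For example, `is_port_open("<monkey-island-ip>", 5000)` should return `True` once the appliance has finished booting. A `False` result usually points to the VM still starting up or to a firewall rule blocking the port.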

Once I am logged in with the default username (monkeyuser) and password, I can set up the system.

I start by clicking on Configure Monkey.

I need to click the Network tab and change the Target list IP address to the IP address of the OWASP VM running in the App segment (172.11.11.115).

Then I clicked Run Monkey on the left and selected From Island.

At that moment the tool launches the exploits automatically.

Launching the attacks and exploits

With Kali Linux tools

In my environment, the Kali Linux server address is 172.11.11.107.

And the OWASP Broken Web Application has the following address: 172.11.11.115.

In this first stage, I started to use Kali Linux with nmap to scan the OWASP Web server.

In the next step, I used the nikto command to scan the server for vulnerabilities.
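The exact command lines I used are not reproduced here, so the following Python sketch only shows what plausible invocations of the two scans look like. The options are assumptions: `-sV` asks nmap to detect service versions, and `-h` tells nikto which host to scan. The `build_scan_commands` helper is hypothetical and only builds and prints the commands; it does not run anything.

```python
import ipaddress
import shlex


def build_scan_commands(target):
    """Return the nmap and nikto argument lists for a single IP target."""
    # Raises ValueError if the target is not a valid IP address.
    ip = str(ipaddress.ip_address(target))
    return [
        ["nmap", "-sV", ip],   # -sV: probe open ports for service/version info
        ["nikto", "-h", ip],   # -h: target host to scan
    ]


# Address of the OWASP VM as given in this post.
for argv in build_scan_commands("172.11.11.115"):
    print(shlex.join(argv))
```

To actually launch the scans from the Kali VM, each argument list could be passed to `subprocess.run(argv, check=True)`. Only do this against systems you own or are authorised to test.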

The result of the exploit is now visible in the CSP Console, as you can see on the screen below. At the top, there is a representation of the attempts to compromise the server, spread over a time range with a slider that can be adjusted as needed.

The attacks have triggered a lot of Emerging Threats (ET Scan) alerts with Medium, High and Critical severity levels.
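When a scan generates this many alerts, a quick tally per severity level helps decide where to look first. Here is a small sketch of that idea in Python. The alert records and the `count_by_severity` helper are made up for illustration; they are not exported from the console, and the real event format will differ.

```python
from collections import Counter

# Hypothetical alert records, for illustration only.
ALERTS = [
    {"signature": "example-scan-1", "severity": "Medium"},
    {"signature": "example-scan-2", "severity": "High"},
    {"signature": "example-exploit-1", "severity": "Critical"},
    {"signature": "example-scan-3", "severity": "High"},
]


def count_by_severity(alerts):
    """Count alerts per severity, listed from most to least severe."""
    order = ["Critical", "High", "Medium", "Low"]
    counts = Counter(alert["severity"] for alert in alerts)
    # Skip severity levels that have no alerts at all.
    return {level: counts[level] for level in order if counts[level]}


for level, total in count_by_severity(ALERTS).items():
    print(f"{level}: {total}")
```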

When I click on VMs Affected, a list of the VMs that have been affected by the vulnerabilities is displayed:

In addition, clicking the purple bar displays a detail window:

With Monkey Island tools

As I said before, the scanner starts automatically once the setup is finished. When it has completed its scanning operations, Monkey Island shows a map with all the results, accessible through a web page.

It also displays a map of the devices that have been scanned by the tool.

On the right of the page, there is a tab called ATT&CK report that helps understand the exploits that have successfully been used or tried.

On the VMC on AWS Console, the results are displayed the same way as before with the Kali Linux tool:

Conclusion

This new Advanced Firewall add-on IDS/IPS feature is really interesting, as today it’s the only way to prevent attackers from exploiting vulnerabilities from inside the SDDC.

That concludes the post. I hope it has given you a better understanding of how powerful this feature is.