The latest M12 release of the SDDC (version 1.12) came with a lot of interesting storage features, including vSAN compression for i3en and TRIM/UNMAP (which I will cover in a future post), as well as new networking features such as SDDC Groups, VMware Transit Connect, time-based scheduling of DFW rules, and many more.

One that particularly stands out for me is the Multi-Edge SDDC capability.

Multi-Edge SDDC (or Edge Scaleout)

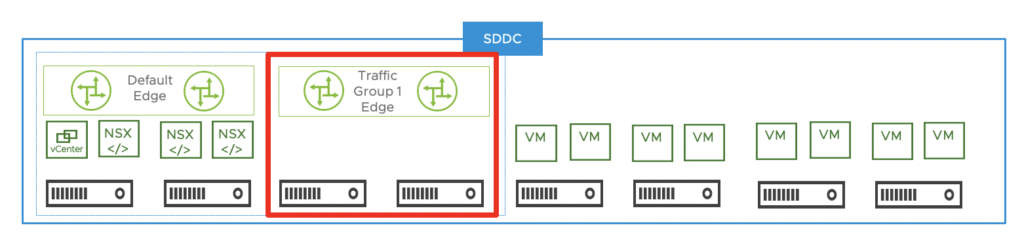

By default, any SDDC is deployed with a single default Edge (actually a pair of VMs) whose size is based on the SDDC sizing (Medium by default). This Edge can be resized to Large when needed.

Each Edge has three logical connections to the outside world: Internet (IGW), Intranet (TGW or DX Private VIF), and Provider (Connected VPC). These connections share the same host Elastic Network Adapter (ENA) and its limits.

In the latest M12 version of VMC on AWS, Multi-Edge capability is added to the SDDC. This gives customers the ability to add capacity for North-South network traffic simply by adding Edges.

The goal of this feature is to allow multiple Edge appliances to be deployed, therefore removing some of the scale limitations by:

- Using multiple host ENAs to spread network load for traffic in/out of the SDDC,

- Using multiple Edge VMs to spread the CPU/Memory load.

To enable the feature, additional network interfaces (ENAs) are provisioned in the AWS network and additional compute capacity is created.

It’s important to mention that you do need additional hosts in the management cluster of the SDDC to support it, so this feature comes with an additional cost.

Multi-Edge SDDC – Use Cases

The deployment of additional Edges allows for higher network bandwidth in the following use cases:

- SDDC to SDDC connectivity

- SDDC to native VPCs

- SDDC to on-premises via Direct Connect

- SDDC to the Connected VPC

Keep in mind that for the first three, VMware Transit Connect is mandatory to get the increased network capacity from those multiple Edges. As a reminder, Transit Connect is a high-bandwidth, low-latency and resilient connectivity option for SDDC to SDDC communication within an SDDC Group. It also enables high-bandwidth connectivity to SDDCs from native VPCs. If you need more information on it, my colleague Gilles Chekroun has an excellent blog post here.

Multiple Edges make it possible to steer specific traffic sets by leveraging Traffic Groups.

Traffic Groups

Traffic Groups are a new concept that is similar in a way to source-based routing. Source-based routing selects which route (next hop) to follow based on the source IP address. The source can be an individual IP or a complete subnet.

With this new capability, customers can now choose to steer certain traffic sets to a specific Edge.

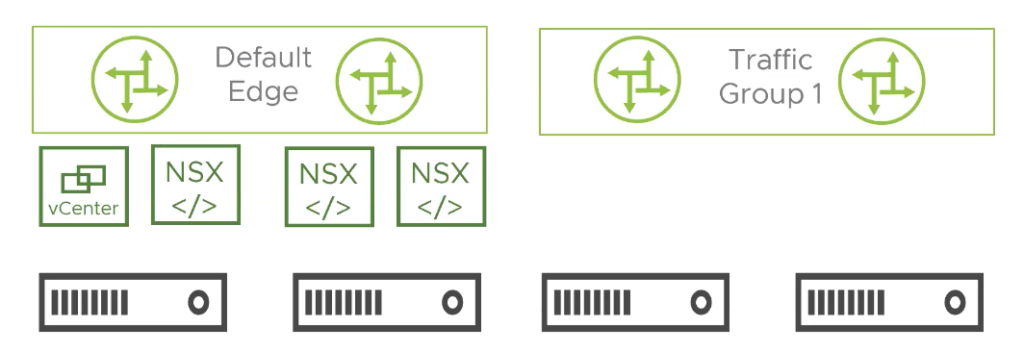

When you create a traffic group, an additional active Edge (with a standby Edge) is deployed on a separate host. All Edge appliances are deployed with an anti-affinity rule to ensure only one Edge per host, so there need to be 2N+2 hosts in the cluster (where N is the number of traffic groups).
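As a quick sanity check of the 2N+2 rule, here is a minimal Python sketch. The function name `required_hosts` is hypothetical; it only encodes the arithmetic stated in this post (one host per Edge VM, one default pair plus one pair per traffic group) and is not part of any VMware tooling.

```python
def required_hosts(traffic_groups: int) -> int:
    """Hosts needed so that every Edge VM lands on its own host (2N + 2)."""
    if traffic_groups < 0:
        raise ValueError("traffic_groups must be >= 0")
    edges_per_pair = 2  # each pair is one active Edge plus one standby Edge
    default_pairs = 1   # the default Edge pair always exists
    return edges_per_pair * (default_pairs + traffic_groups)


if __name__ == "__main__":
    for n in range(4):
        print(f"{n} traffic group(s) -> {required_hosts(n)} hosts")
```

For example, a single traffic group needs four hosts: two for the default pair and two for the traffic group pair.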

Each additional Edge then handles traffic for its associated network prefixes. All remaining traffic is handled by the Default Edge.
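The selection logic can be illustrated with a small Python sketch. This is only a conceptual model, not how NSX-T implements it: the Edge names, the `select_edge` function, and the choice of longest-prefix match for overlapping prefixes are all assumptions made for the example.

```python
import ipaddress
from typing import Mapping, Sequence

DEFAULT_EDGE = "default-edge"  # hypothetical name for the default Edge pair


def select_edge(source_ip: str, traffic_groups: Mapping[str, Sequence[str]]) -> str:
    """Pick the Edge for a source IP; unmatched traffic falls back to the default Edge."""
    addr = ipaddress.ip_address(source_ip)
    best_edge, best_len = DEFAULT_EDGE, -1
    for edge, prefixes in traffic_groups.items():
        for prefix in prefixes:
            net = ipaddress.ip_network(prefix)
            # Assumption: when prefixes overlap, the most specific one wins.
            if addr in net and net.prefixlen > best_len:
                best_edge, best_len = edge, net.prefixlen
    return best_edge


if __name__ == "__main__":
    groups = {"edge-tg-1": ["10.10.1.0/24", "10.10.2.5/32"]}
    print(select_edge("10.10.1.20", groups))  # source inside the /24
    print(select_edge("10.10.3.7", groups))   # no match, default Edge
```

The key point is the fallback: only sources listed in a traffic group's prefixes leave the default Edge.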

Source-based routing is configured with prefixes defined in prefix lists that can be set up directly in the VMC on AWS Console.

To ensure proper ingress routing from the AWS VPC to the right Edge, the shadow VPC route tables are also updated with the prefixes.

Multi-Edge SDDC requirements

The following requirements must be met in order to leverage the feature:

- SDDC M12 version is required

- Transit Connect for SDDC to SDDC, SDDC to VPC, or SDDC to on-premises connectivity

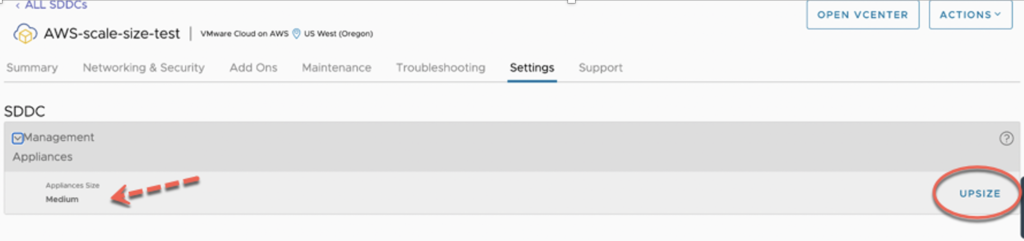

- SDDC resized to Large

- Enough capacity in the management cluster

A Large SDDC means that the management appliances and the Edge are scaled up from Medium to Large. This is now a customer-driven option that no longer involves technical Support, as it’s possible to upsize an SDDC directly from the Cloud Console.

A Large SDDC means a higher number of vCPUs and more memory for the management components (vCenter, NSX Manager and Edges). The upscaling operation involves at least one hour of downtime, so it has to be planned during a maintenance window.

Enabling a Multi-Edge SDDC

This follows a three-step process.

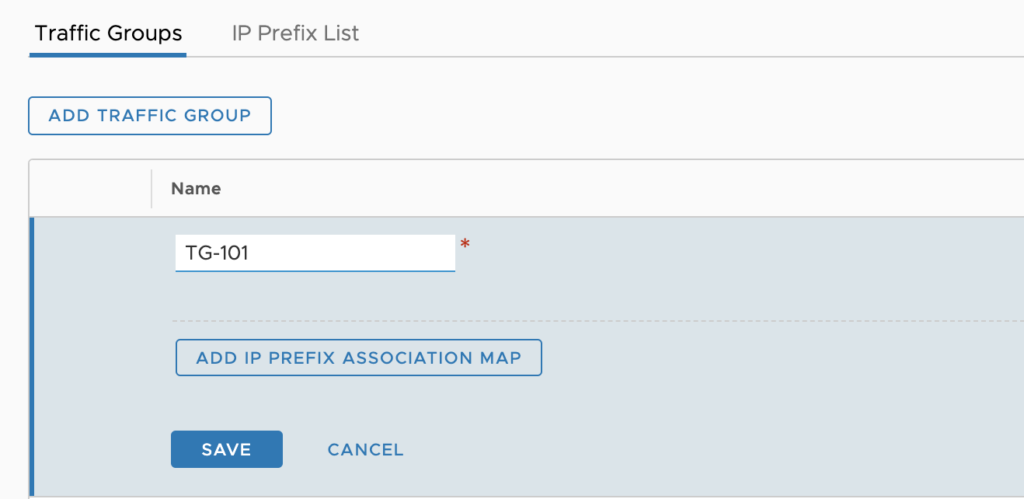

First of all, we must define a Traffic Group, which creates the new Edges (as a pair). Each Traffic Group creates an additional active/standby Edge pair. Remember also that the “Traffic Group” Edges are always Large form factor.

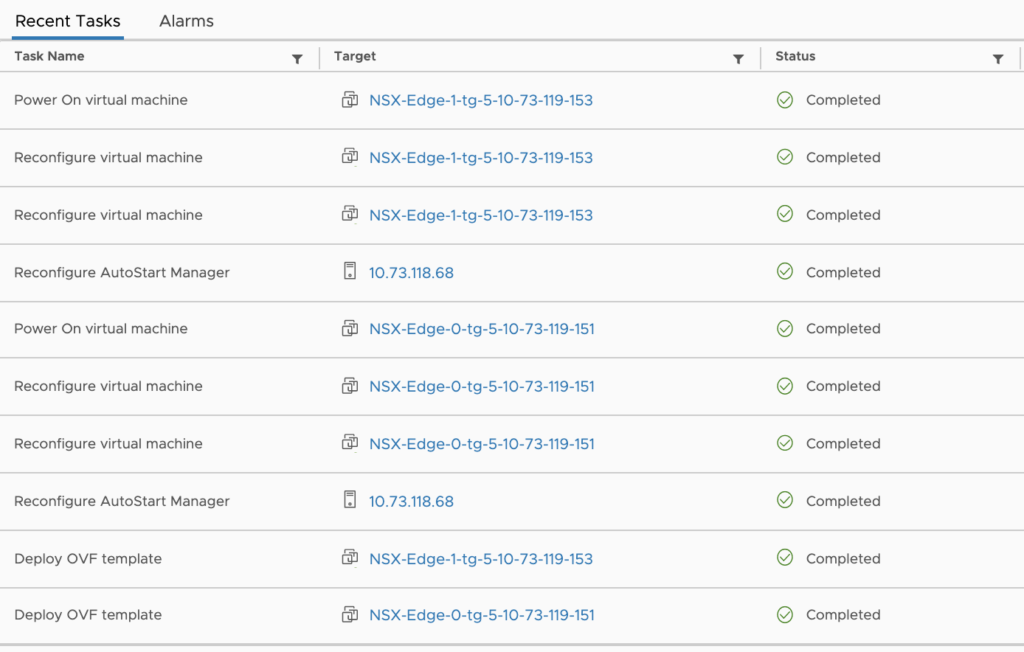

You will immediately see that two additional Edge nodes are being deployed. The new Edges have “tg” in their name suffix.

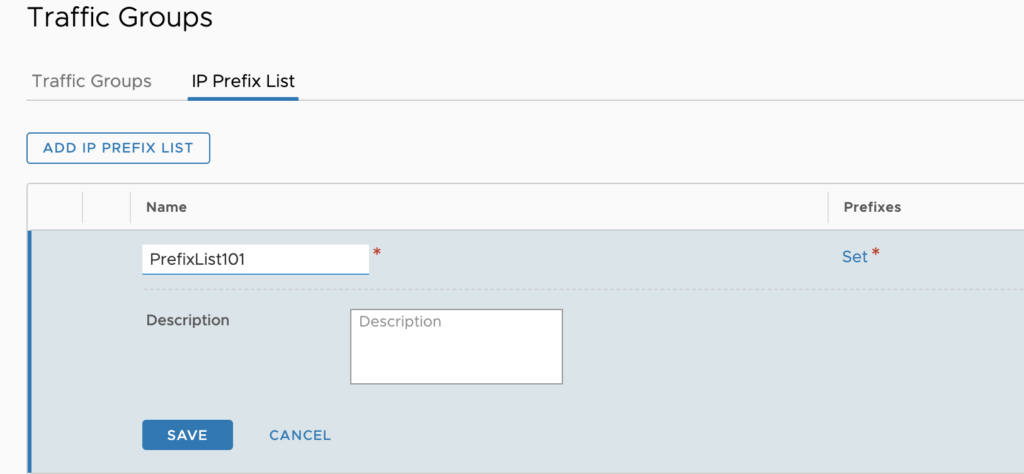

The next step is to define a Prefix List with specific prefixes, which will later be associated with the Traffic Group. It contains the source IP addresses of the SDDC virtual machines that will use the newly deployed Edge.

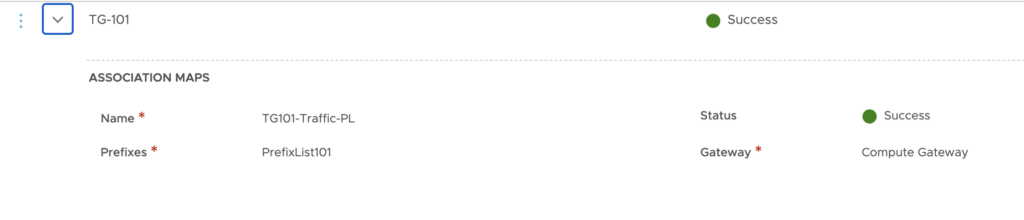

After a few minutes, you can confirm that the Traffic Group is ready:

NB: NSX-T configures source-based routing with the prefixes you define in the prefix list on the CGW as well as on the Edge routers to ensure symmetric routing within the SDDC.

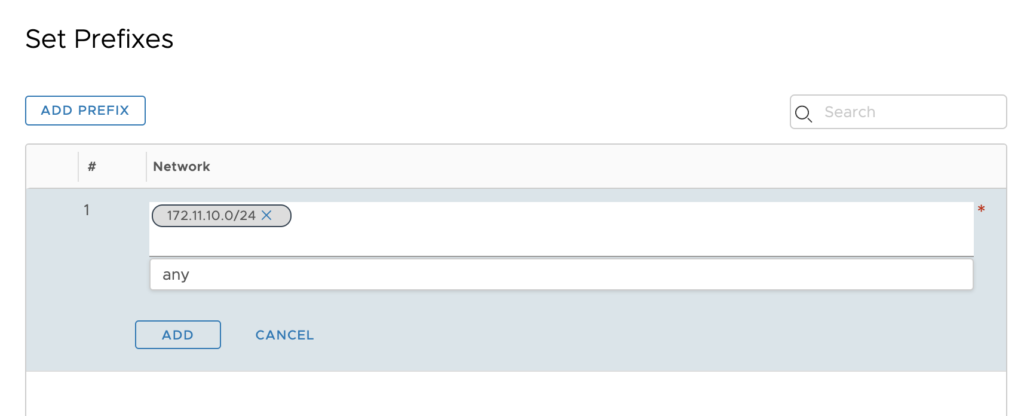

You just need to click on Set to enter the prefix list. Enter the CIDR range; it can be a /32 if you just want to use a single VM as a source IP.

NB: Up to 64 prefixes can be created in a Prefix List.
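Before typing prefixes into the console, it can help to check them locally. The following Python sketch is a hypothetical pre-check, not the console's own validation: the `validate_prefix_list` helper is made up for this example, and rejecting entries with host bits set is a choice of the sketch. Only the 64-prefix limit comes from this post.

```python
import ipaddress
from typing import List, Sequence

MAX_PREFIXES = 64  # limit quoted in this post


def validate_prefix_list(cidrs: Sequence[str]) -> List[str]:
    """Normalise CIDR strings and enforce the 64-prefix limit."""
    if len(cidrs) > MAX_PREFIXES:
        raise ValueError(f"{len(cidrs)} prefixes given, maximum is {MAX_PREFIXES}")
    normalised = []
    for cidr in cidrs:
        # strict=True raises ValueError for entries such as 10.0.0.5/24,
        # where host bits are set. A bare address becomes a /32.
        network = ipaddress.ip_network(cidr, strict=True)
        normalised.append(str(network))
    return normalised


if __name__ == "__main__":
    print(validate_prefix_list(["10.10.1.0/24", "10.10.2.5"]))
```

A bare address such as `10.10.2.5` is treated as a single-VM /32 source, matching the single-VM case described above.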

When you are done entering the subnets in the prefix list, click Apply and then Save to create the Prefix List.

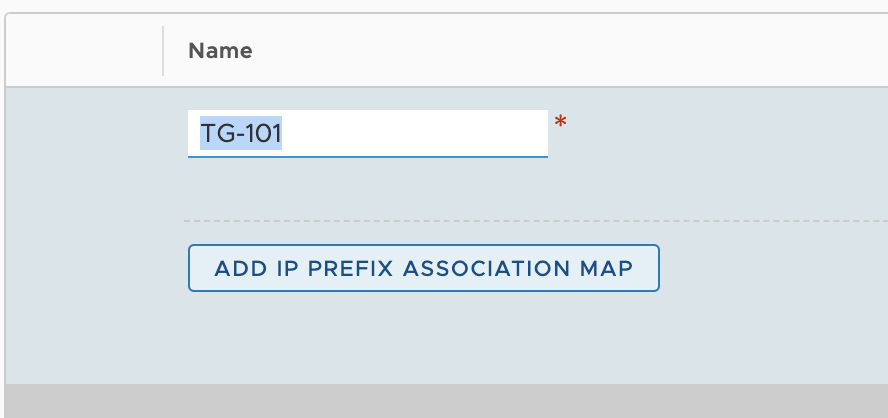

The last step is to associate the Prefix List with the Traffic Group through an Association Map. To do so, click on Edit.

We now need to specify which prefix list the traffic group should use. Click on ADD IP PREFIX ASSOCIATION MAP:

Then we need to enter the Prefix List and give a name to the Association Map.

Going forward, any traffic that matches that prefix list will use the newly deployed Edge.

Monitoring a Multi-Edge SDDC

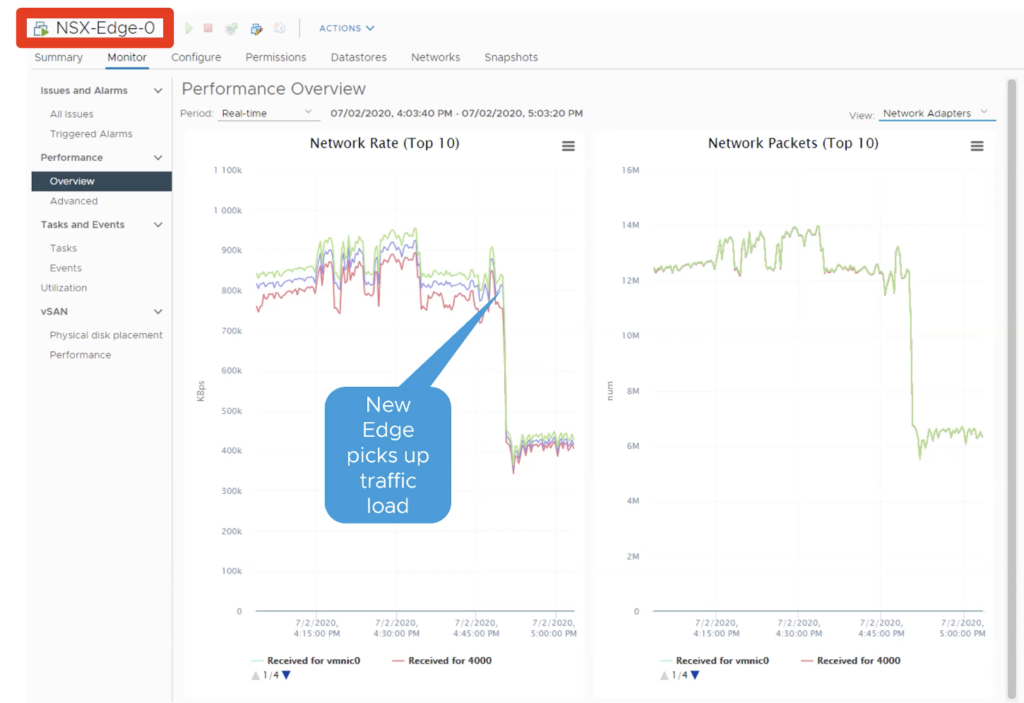

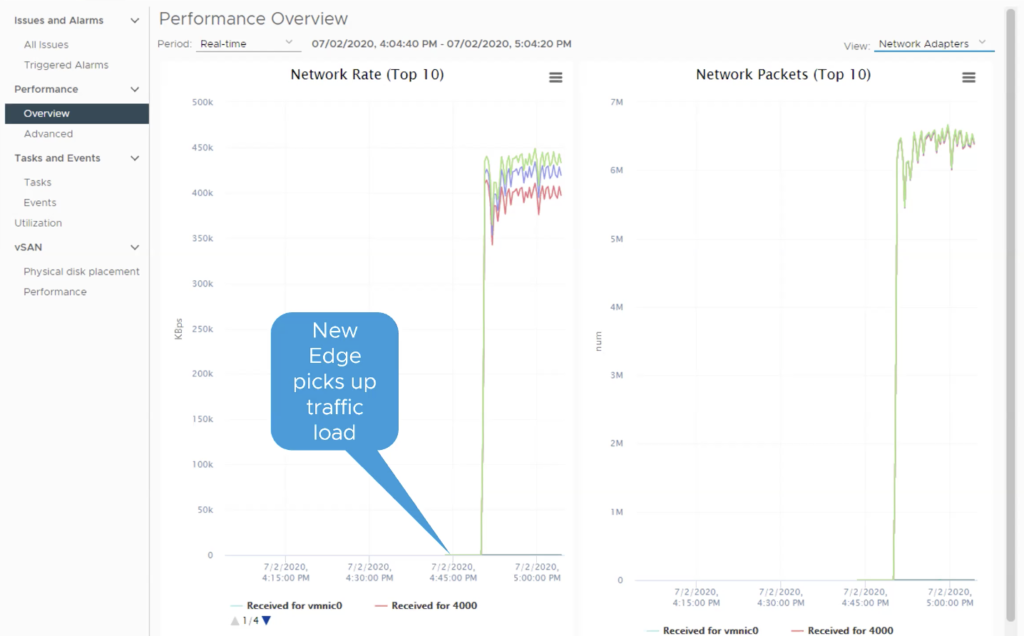

Edge nodes cannot be monitored in the VMC Console, but you can always visualise the network rate and consumption through the vCenter web console.

When we look at the vCenter list of Edges, the default Edge has no “-tg” in its name, so NSX-Edge-0 is the default. Once we add the new traffic group, its Edge handles the associated traffic and relieves the load on this default Edge.

NSX-Edge-0-tg-xxx is the new one, and we can see traffic consumption increase on this new Edge because of the new traffic flowing over it:

Once the new scale-out Edge is deployed, the prefix list also uses the new Edge as its next hop going forward. This is propagated to the internal route tables of the Default Edge as well as to the CGW route table.

All of these features are exposed through the API Explorer: the Traffic Group definitions in the NSX AWS VMC integration API, and the prefix list definitions in the NSX VMC Policy API.

In conclusion, remember that Multi-Edge SDDC doesn’t increase Internet, VPN or NAT capacity. Also remember that there is a cost associated with it because of the additional hardware requirements.